
Abstract

Space debris in the Low Earth Orbit (LEO) region gradually loses altitude due to drag from the upper atmosphere and eventually undergoes atmospheric reentry. For large space objects, or large fragments of them, that do not burn up in the atmosphere, the exact time of reentry and the location of the impact on Earth are difficult to predict; precise and repeated observations are therefore needed to update the state of the object. The orbital parameters of a decaying orbit quickly become obsolete, and the position predicted from them can fall outside a telescope's field of view. A wide field of view instrument, even one with lower angular accuracy, can observe objects that lag behind or run ahead of the predicted position by more than one minute in time, and can update the orbital parameters in real time so that the narrow field of view instrument is pointed correctly.
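To put the one-minute figure in perspective, the sketch below estimates how far a LEO object appears to move across the sky when its timing is off by 60 s. It assumes a circular orbit at an illustrative 400 km altitude, ignores Earth rotation, and treats the short arc as a straight segment perpendicular to the line of sight; the altitude and slant ranges are example values, not parameters of the system described here.

```python
import math

MU_EARTH = 398600.4418  # km^3/s^2, Earth's gravitational parameter
R_EARTH = 6378.137      # km, equatorial radius

def circular_speed(altitude_km: float) -> float:
    """Orbital speed (km/s) of a circular orbit at the given altitude."""
    return math.sqrt(MU_EARTH / (R_EARTH + altitude_km))

def apparent_offset_deg(altitude_km: float, timing_error_s: float, range_km: float) -> float:
    """Angular offset (deg) of an object that is timing_error_s ahead of or
    behind its prediction, seen from a given slant range.

    Assumption: the along-track arc is approximated by a straight segment
    perpendicular to the line of sight; Earth rotation is ignored.
    """
    along_track_km = circular_speed(altitude_km) * abs(timing_error_s)
    return math.degrees(math.atan(along_track_km / range_km))

for rng in (400.0, 1000.0, 2000.0):
    print(f"range {rng:6.0f} km -> offset {apparent_offset_deg(400.0, 60.0, rng):5.1f} deg")
```

Under these assumptions a 60 s error at 400 km altitude corresponds to about 460 km along track, i.e. roughly 49° for an object overhead and about 25° at a 1000 km slant range: far larger than a narrow telescope field, but of the same order as the 60 × 40 degree wide field.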

The wide field of view system is based on an off-the-shelf 24-megapixel DSLR camera equipped with a 20 mm lens. Its effective field of view is 60 × 40 degrees, and it operates in staring mode. The system performs the following tasks in real time:

1. Streak recognition: the 6000 × 4000 pixel images are processed in real time to identify the moving line segments (streaks) produced by LEO objects. The image processing algorithms must detect faint targets in the presence of clouds, or near the horizon, where the background light is higher. To achieve the desired detection performance, we combined motion-based object detection with a deep-learning-based method and a Hough-line-based method. To increase sensitivity, we also used the raw camera data (14 bits per pixel) in addition to the 8-bit JPEG data.

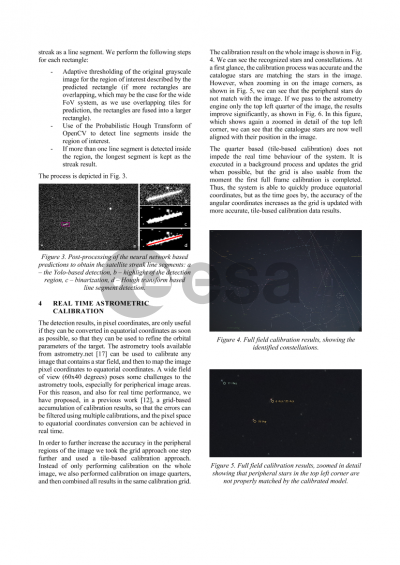

2. Real-time astrometric reduction: we used a grid-based reduction system, recalibrated as often as possible with the tools from Astrometry.net. To overcome the distortions of the wide-FOV optics, separate calibrations are performed for multiple regions of the image and then combined (tiled) into a single, centralized calibration grid.

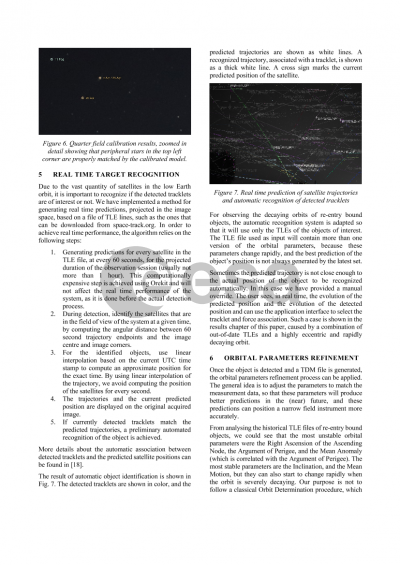

3. Real-time object identification based on the existing orbital parameters: using the Orekit library, the positions of the objects of interest are predicted before the detection process starts and projected into image space, where they are compared with the tracklets detected in real time. The matching relies mainly on the speed and orientation of the trajectory, so an offset in position along the trajectory does not prevent identification; this tolerates the large along-track differences typical of decaying orbits.

4. Real-time orbital element update for a more accurate short-term prediction: using an Extended Kalman Filter and the detected positions, we update the parameters most likely to affect the prediction, namely the object's Mean Anomaly (which mainly influences the along-track error) and the Right Ascension of the Ascending Node (which mainly influences the cross-track error). Although other parameters may also need to be updated, adjusting these two ensures that, for a short time, the predicted position of the object falls within the field of view of the telescope.
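Two of the ingredients named in step 1, motion-based detection and Hough-transform line voting, can be illustrated on a small synthetic frame pair. This is a minimal sketch, not the authors' pipeline (which also uses a deep-learning detector and raw 14-bit data); the function names, the brightening threshold, and the accumulator resolution are illustrative assumptions.

```python
import math
from collections import Counter

def difference_mask(prev, curr, threshold):
    """Motion-based step: return (x, y) of pixels that brightened by more than
    `threshold` between two frames. Static stars cancel in the difference."""
    return [(x, y)
            for y, (row_p, row_c) in enumerate(zip(prev, curr))
            for x, (p, c) in enumerate(zip(row_p, row_c))
            if c - p > threshold]

def hough_strongest_line(points, n_theta=180, rho_step=1.0):
    """Vote in (theta, rho) space, rho = x*cos(theta) + y*sin(theta), and
    return (theta in rad, rho in px, votes) of the best-supported line."""
    votes = Counter()
    for x, y in points:
        for k in range(n_theta):
            theta = math.pi * k / n_theta
            rho = x * math.cos(theta) + y * math.sin(theta)
            votes[(k, round(rho / rho_step))] += 1
    (k, r), count = votes.most_common(1)[0]
    return math.pi * k / n_theta, r * rho_step, count

# Synthetic 40x40 frames: uniform background, one static star, one new streak.
prev = [[100] * 40 for _ in range(40)]
curr = [row[:] for row in prev]
prev[5][30] = curr[5][30] = 250      # star present in both frames
for x in range(30):
    curr[x + 5][x] += 20             # faint streak along y = x + 5
points = difference_mask(prev, curr, threshold=10)
theta, rho, count = hough_strongest_line(points)
print(f"{len(points)} candidate pixels, line at {math.degrees(theta):.0f} deg, {count} votes")
```

Because the accumulator is quantized, neighbouring angle bins can receive the same number of votes; a production detector would refine the peak and extract segment endpoints rather than an infinite line.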
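The tiling idea of step 2 can be sketched as a grid of independent per-tile models, each fitted to matched reference stars and looked up by pixel position. The sketch deliberately replaces the Astrometry.net solutions with a simple least-squares affine model in (RA, Dec) per tile, which is only reasonable for small tiles away from the celestial poles and the RA = 0/360° wrap; a real implementation would fit a tangent-plane projection with distortion terms. The class and function names are hypothetical.

```python
def solve3(a, b):
    """Solve a 3x3 linear system a @ x = b (Gaussian elimination, partial pivoting)."""
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(3):
        piv = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, 3):
            f = m[r][col] / m[col][col]
            for c in range(col, 4):
                m[r][c] -= f * m[col][c]
    x = [0.0] * 3
    for r in (2, 1, 0):
        x[r] = (m[r][3] - sum(m[r][c] * x[c] for c in range(r + 1, 3))) / m[r][r]
    return x

def fit_affine(pixels, values, origin):
    """Least-squares fit of value = c0 + c1*(x - ox) + c2*(y - oy).
    Centering on the tile origin keeps the normal equations well conditioned."""
    ox, oy = origin
    ata = [[0.0] * 3 for _ in range(3)]
    atb = [0.0] * 3
    for (x, y), v in zip(pixels, values):
        row = (1.0, x - ox, y - oy)
        for i in range(3):
            atb[i] += row[i] * v
            for j in range(3):
                ata[i][j] += row[i] * row[j]
    return solve3(ata, atb)

class TiledCalibration:
    """Hypothetical calibration grid: an independent affine (RA, Dec) model per tile."""

    def __init__(self, width, height, nx, ny):
        self.nx, self.ny = nx, ny
        self.tw, self.th = width / nx, height / ny
        self.models = {}  # (tx, ty) -> (origin, ra_coeffs, dec_coeffs)

    def tile_of(self, x, y):
        """Index of the tile containing pixel (x, y), clamped to the grid."""
        tx = min(max(int(x // self.tw), 0), self.nx - 1)
        ty = min(max(int(y // self.th), 0), self.ny - 1)
        return tx, ty

    def calibrate_tile(self, tile, star_pixels, star_radec):
        """Fit one tile from >= 3 non-collinear stars: pixel -> (ra, dec) in degrees."""
        tx, ty = tile
        origin = ((tx + 0.5) * self.tw, (ty + 0.5) * self.th)
        ra = fit_affine(star_pixels, [s[0] for s in star_radec], origin)
        dec = fit_affine(star_pixels, [s[1] for s in star_radec], origin)
        self.models[tile] = (origin, ra, dec)

    def pixel_to_radec(self, x, y):
        """Convert a pixel using the model of its tile (KeyError if not yet calibrated)."""
        (ox, oy), ra, dec = self.models[self.tile_of(x, y)]
        dx, dy = x - ox, y - oy
        return (ra[0] + ra[1] * dx + ra[2] * dy, dec[0] + dec[1] * dx + dec[2] * dy)
```

Each tile can be recalibrated on its own whenever enough stars are matched there, so a cloud covering part of the sky only leaves the affected tiles on their previous calibration.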
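Step 3 matches detections to predictions mainly by apparent speed and orientation. One way to do this is sketched below, assuming that both the predicted and the detected tracks are approximated as straight lines in pixel coordinates over a short interval. The `Track` type, the tolerances, and the extra gate on cross-track distance are illustrative assumptions; the Orekit prediction and projection into image space are not reproduced.

```python
import math
from dataclasses import dataclass

@dataclass
class Track:
    """Straight-line motion in image space: position (px) and velocity (px/s)."""
    name: str
    x0: float
    y0: float
    vx: float
    vy: float

def angle_diff_deg(a: float, b: float) -> float:
    """Smallest difference between two direction angles, in degrees (0..180)."""
    d = abs(a - b) % 360.0
    return min(d, 360.0 - d)

def match_tracklet(detected, predictions, max_speed_err=0.15,
                   max_angle_err_deg=5.0, max_cross_track_px=200.0):
    """Return the prediction whose speed and direction best match `detected`,
    or None. Along-track offsets are ignored; only the perpendicular
    (cross-track) distance to the predicted path is bounded."""
    d_speed = math.hypot(detected.vx, detected.vy)
    d_dir = math.degrees(math.atan2(detected.vy, detected.vx))
    best, best_score = None, float("inf")
    for p in predictions:
        p_speed = math.hypot(p.vx, p.vy)
        speed_err = abs(d_speed - p_speed) / p_speed          # relative speed error
        angle_err = angle_diff_deg(d_dir, math.degrees(math.atan2(p.vy, p.vx)))
        ux, uy = p.vx / p_speed, p.vy / p_speed               # predicted direction
        dx, dy = detected.x0 - p.x0, detected.y0 - p.y0
        cross = abs(dx * uy - dy * ux)                        # distance to predicted line
        if (speed_err > max_speed_err or angle_err > max_angle_err_deg
                or cross > max_cross_track_px):
            continue
        score = speed_err / max_speed_err + angle_err / max_angle_err_deg
        if score < best_score:
            best, best_score = p, score
    return best

preds = [Track("A", 1000, 1000, 50, 10), Track("B", 3000, 500, -20, 45)]
late = Track("det", 1000 + 50 * 70, 1000 + 10 * 70, 49, 10.5)  # 70 s behind "A"
print(match_tracklet(late, preds).name)
```

In this example the detection lies 70 s behind prediction "A" along the same path and is still matched to it, which is the property the text relies on for decaying orbits.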
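Step 4 can be illustrated with a deliberately reduced Extended Kalman Filter whose state holds only the two elements named in the text: the mean anomaly at epoch and the RAAN. To stay short, the sketch assumes a circular orbit with fixed inclination, argument of perigee and mean motion, no perturbations (in particular no J2 drift of the node), and geocentric RA/Dec measurements. A real ground-based setup measures topocentric directions and would propagate with a full model such as Orekit. All names, noise levels and tolerances are hypothetical.

```python
import math

def radec(state, t, incl, argp, n):
    """Geocentric (RA, Dec) in rad of a circular orbit at t seconds after epoch.
    state = [M0, raan]: mean anomaly at epoch and RAAN (rad)."""
    m0, raan = state
    u = argp + m0 + n * t  # argument of latitude (e = 0, so M equals true anomaly)
    x = math.cos(raan) * math.cos(u) - math.sin(raan) * math.sin(u) * math.cos(incl)
    y = math.sin(raan) * math.cos(u) + math.cos(raan) * math.sin(u) * math.cos(incl)
    z = math.sin(u) * math.sin(incl)
    return [math.atan2(y, x), math.asin(max(-1.0, min(1.0, z)))]

def wrap(a):
    """Wrap an angle to (-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)] for i in range(2)]

def transpose(a):
    return [[a[j][i] for j in range(2)] for i in range(2)]

def ekf_update(state, P, z, t, incl, argp, n, sigma, q=1e-10):
    """One EKF predict + update step for state [M0, raan] given z = (RA, Dec)."""
    P = [[P[0][0] + q, P[0][1]], [P[1][0], P[1][1] + q]]  # random-walk process noise
    h0 = radec(state, t, incl, argp, n)
    eps = 1e-6
    H = [[0.0, 0.0], [0.0, 0.0]]  # numerical Jacobian d(RA, Dec)/d(M0, raan)
    for j in range(2):
        s = list(state)
        s[j] += eps
        hj = radec(s, t, incl, argp, n)
        for i in range(2):
            H[i][j] = wrap(hj[i] - h0[i]) / eps
    innov = [wrap(z[0] - h0[0]), z[1] - h0[1]]  # RA residual wrapped
    PHt = matmul(P, transpose(H))
    S = matmul(H, PHt)
    S[0][0] += sigma ** 2
    S[1][1] += sigma ** 2
    det = S[0][0] * S[1][1] - S[0][1] * S[1][0]
    S_inv = [[S[1][1] / det, -S[0][1] / det], [-S[1][0] / det, S[0][0] / det]]
    K = matmul(PHt, S_inv)
    new_state = [state[i] + K[i][0] * innov[0] + K[i][1] * innov[1] for i in range(2)]
    KH = matmul(K, H)
    I_KH = [[(1.0 if i == j else 0.0) - KH[i][j] for j in range(2)] for i in range(2)]
    return new_state, matmul(I_KH, P)

incl, n = math.radians(51.6), 2 * math.pi / 5550.0
truth = [0.30, 1.00]
state, P = [0.35, 0.97], [[0.01, 0.0], [0.0, 0.01]]  # ~2.9 deg along-track error
for k in range(31):  # one noiseless measurement every 2 s for 60 s
    t = 2.0 * k
    state, P = ekf_update(state, P, radec(truth, t, incl, 0.0, n), t, incl, 0.0, n,
                          sigma=math.radians(0.01))
print(state)
```

In this geometry RA shifts one-to-one with the RAAN while Dec depends only on the argument of latitude, which is why the two measured angles are enough to separate along-track (mean anomaly) and cross-track (RAAN) corrections.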

The solution can be implemented with commercial DSLR cameras or specialized 16-bit cameras, and can easily be deployed alongside any narrow-FOV instrument.
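When choosing a different camera or lens, the field of view and pixel scale follow from the sensor dimensions and focal length. The sketch below assumes an APS-C sensor of 23.5 × 15.6 mm with 6000 pixels across, typical of 24-megapixel DSLRs but not stated in the source, and a rectilinear (non-fisheye) lens.

```python
import math

ARCSEC_PER_RAD = 206264.806

def field_of_view_deg(sensor_mm: float, focal_mm: float) -> float:
    """Full angular field of a rectilinear lens across one sensor dimension."""
    return math.degrees(2.0 * math.atan(sensor_mm / (2.0 * focal_mm)))

def plate_scale_arcsec_per_px(pixel_pitch_um: float, focal_mm: float) -> float:
    """Angular size of one pixel at the image center (small-angle approximation)."""
    return ARCSEC_PER_RAD * (pixel_pitch_um * 1e-3) / focal_mm

fov_h = field_of_view_deg(23.5, 20.0)
fov_v = field_of_view_deg(15.6, 20.0)
scale = plate_scale_arcsec_per_px(23.5 / 6000 * 1e3, 20.0)  # pitch in micrometres
print(f"{fov_h:.1f} x {fov_v:.1f} deg, {scale:.1f} arcsec/px at center")
```

With these assumed dimensions the result is about 60.9 × 42.6 degrees and roughly 40 arcsec per pixel at the center, close to the 60 × 40 degree effective field quoted above; the pixel scale shrinks toward the edges, one of the distortions the tiled calibration has to absorb.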
