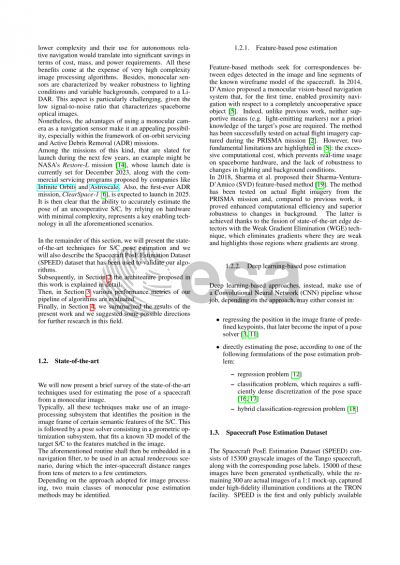

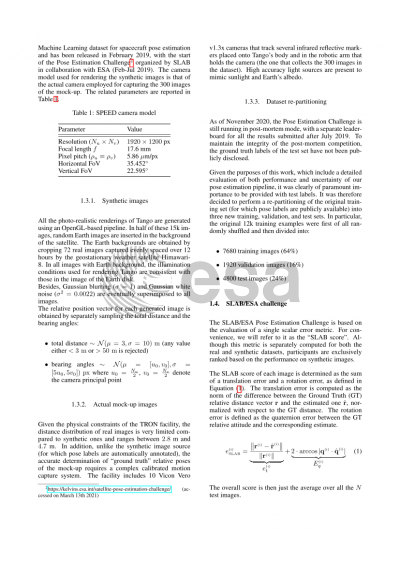

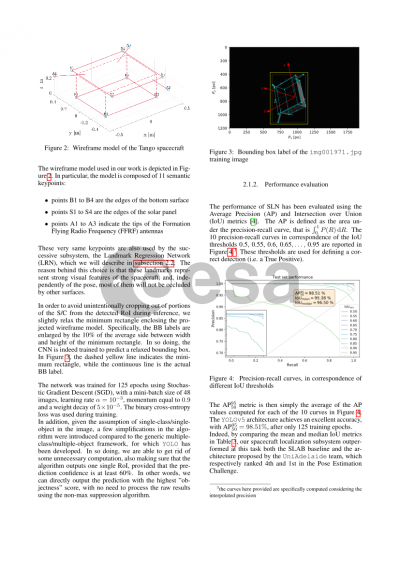

Document details

Abstract

This paper presents the design and validation of a deep learning-based pipeline for estimating the pose of an uncooperative target spacecraft from a single grayscale monocular image.

Autonomous vision-based relative navigation in close proximity to a non-cooperative space object has recently gained remarkable interest in the space industry. Such a technology would be especially appealing for Active Debris Removal (ADR) missions and for on-orbit servicing scenarios.

Compared to more complex sensors such as LiDAR, a simple camera has lower mass, volume and power requirements. This translates into a substantially cheaper chaser spacecraft, at the expense of more complex image processing algorithms.

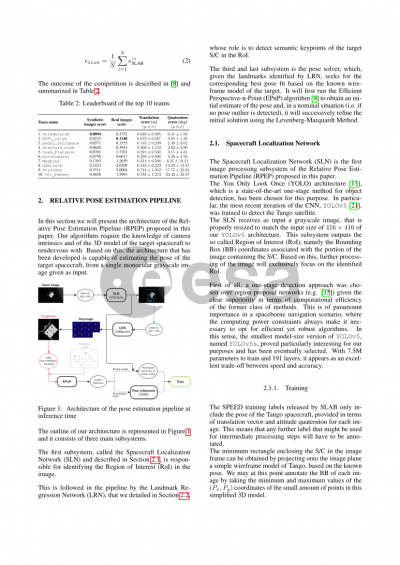

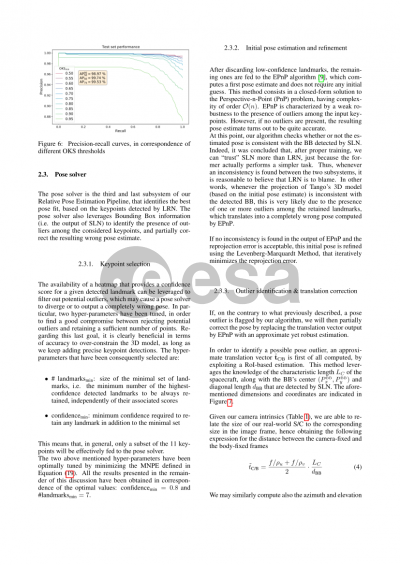

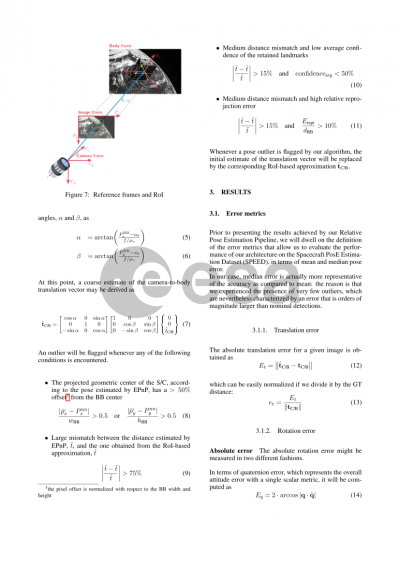

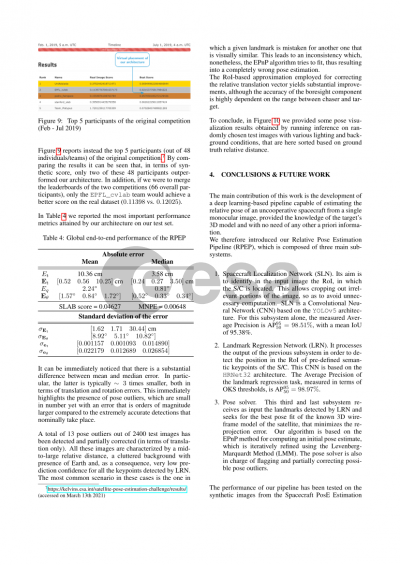

The Relative Pose Estimation Pipeline (RPEP) proposed in this work leverages state-of-the-art Convolutional Neural Network (CNN) architectures to detect the features of the target spacecraft from a single monocular image. The pipeline is composed of three main subsystems. The input image is first processed by an object detection CNN that localizes the portion of the image enclosing the target, i.e. the bounding box. A second CNN then regresses the locations of semantic keypoints of the spacecraft. Finally, a geometric optimization algorithm uses the detected keypoint locations to solve for the relative pose, based on knowledge of the camera intrinsics and of a wireframe model of the target satellite.
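The third stage can be illustrated with a minimal sketch of the quantity such a geometric optimizer would typically minimize: the reprojection error between the wireframe keypoints, projected through a pinhole camera, and the keypoints detected by the CNN. The abstract does not specify the cost function, the pose parameterization or the solver, so the function names, the quaternion convention `(w, x, y, z)` and the intrinsics `fx, fy, cx, cy` below are assumptions made for illustration only.

```python
import math

def quat_to_rotmat(q):
    """Convert a quaternion (w, x, y, z) to a 3x3 rotation matrix (nested lists)."""
    w, x, y, z = q
    n = math.sqrt(w * w + x * x + y * y + z * z)
    w, x, y, z = w / n, x / n, y / n, z / n  # normalize defensively
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]

def project(points_3d, q, t, fx, fy, cx, cy):
    """Project target-frame 3D points to pixels for a candidate pose (q, t).

    Assumes an ideal pinhole camera without lens distortion.
    """
    R = quat_to_rotmat(q)
    pixels = []
    for p in points_3d:
        # Rotate into the camera frame, then translate.
        xc, yc, zc = (sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))
        pixels.append((fx * xc / zc + cx, fy * yc / zc + cy))
    return pixels

def reprojection_cost(points_3d, keypoints_2d, q, t, fx, fy, cx, cy):
    """Sum of squared pixel residuals between projected and detected keypoints."""
    projected = project(points_3d, q, t, fx, fy, cx, cy)
    return sum(
        (u - ku) ** 2 + (v - kv) ** 2
        for (u, v), (ku, kv) in zip(projected, keypoints_2d)
    )
```

A pose solver would search over `q` and `t` to minimize `reprojection_cost`; when the keypoints are cropped from the bounding box, they would first need to be mapped back to full-image pixel coordinates.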

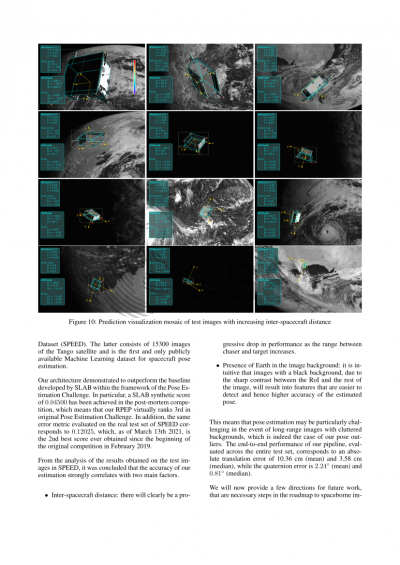

The Spacecraft PosE Estimation Dataset (SPEED), a collection of 15300 images of the Tango spacecraft released by the Space rendezvous LABoratory (SLAB), has been used for training the deep neural networks employed in our pipeline, as well as for evaluating performance and estimation uncertainty.

On SPEED, the RPEP presented here achieves centimeter-level position accuracy and degree-level attitude accuracy, along with considerable robustness to changes in lighting conditions and in the background. In addition, the architecture was shown to generalize well to actual images, despite having been exposed exclusively to synthetic imagery during CNN training.
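Position and attitude accuracy of this kind are commonly quantified as the Euclidean distance between estimated and true relative position, and as the rotation angle between estimated and true attitude quaternions. The abstract does not state which metrics were used, so the following is only a sketch of that common choice, with hypothetical function names.

```python
import math

def position_error(t_est, t_true):
    """Euclidean distance between estimated and true position (same units as input)."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(t_est, t_true)))

def attitude_error_deg(q_est, q_true):
    """Angle in degrees of the rotation separating two quaternions (w, x, y, z).

    The absolute value of the dot product handles the q / -q ambiguity.
    """
    dot = abs(sum(a * b for a, b in zip(q_est, q_true)))
    norm = math.sqrt(sum(a * a for a in q_est)) * math.sqrt(sum(b * b for b in q_true))
    cos_half = min(1.0, dot / norm)  # clamp against floating-point rounding
    return math.degrees(2.0 * math.acos(cos_half))
```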
