Abstract

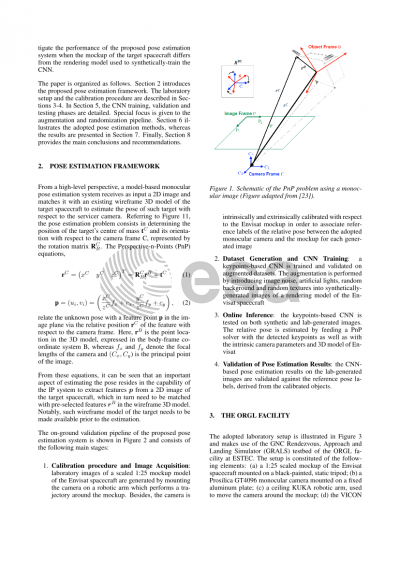

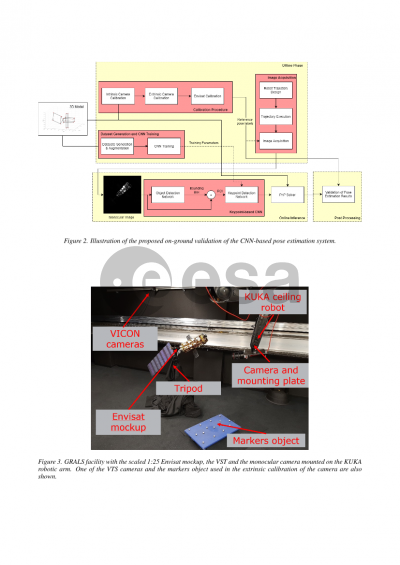

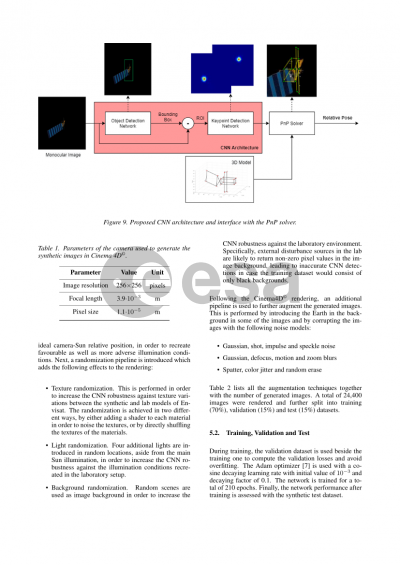

Estimating the relative pose of an inactive spacecraft from an active servicer spacecraft is a critical task in the design of current and planned space missions, because of its relevance for close-proximity operations such as In-Orbit Servicing and Active Debris Removal. A key difficulty is the lack of available space images of the inactive satellite, which complicates the on-ground validation of current monocular camera-based navigation systems: standard Image Processing (IP) algorithms, which are usually tested on synthetic images, tend to fail when implemented in orbit. Following the recent appearance of robust and accurate monocular pose estimation systems based on Convolutional Neural Networks (CNNs), this paper reports on the testing of a novel CNN-based pose estimation pipeline with realistic lab-generated 2D monocular images of the European Space Agency's Envisat spacecraft. To help bridge the reality gap between synthetic and orbital images, the main contribution of this work is to test the performance of CNNs trained on synthetic datasets with more realistic images of the target spacecraft. The proposed pose estimation system is validated by means of a calibration framework that provides an accurate reference relative pose between the target spacecraft and the camera for each lab-generated image, allowing a comparative assessment at both the keypoint detection and the pose estimation level.

By creating a laboratory database of the Envisat spacecraft under space-like conditions, this work further aims to expand the currently available lab-generated dataset of PRISMA’s Tango spacecraft and to extend the current image acquisition procedures. The goal is to facilitate a standardized on-ground validation procedure that can be used in different lab setups and with different target satellites.

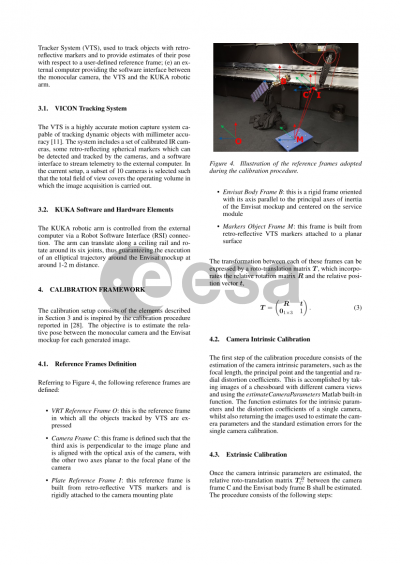

The lab-representative images of Envisat are generated at the Orbital Robotics and GNC lab (ORGL) of ESA’s European Space Research and Technology Centre (ESTEC). A VICON system and a KUKA robotic arm are used to track and control, respectively, the trajectory of the monocular camera around a 1:25 scale mock-up of the Envisat spacecraft.