
Abstract

Outline. We present the OpticalFence network concept, aimed at providing low-cost optical observational data for objects in low Earth orbit (LEO, 200-2000 km altitude).

Motivation. The goal of developing the OpticalFence system is to fill the gap between optical MEO/GEO monitoring and radar-based LEO observations with a robust and economical solution that is modular, expandable and able to provide not only angular and velocity information about the observed object, but also its range.

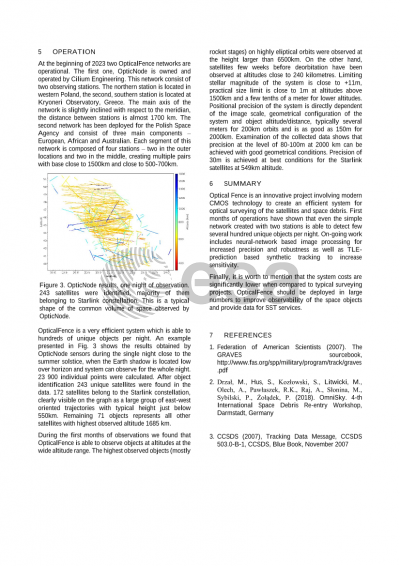

System overview. The system consists of multiple-station segments distributed around the globe along local meridians. Each segment provides 3D position and velocity vectors for objects that cross a common volume of space and are seen by at least two stations; these vectors are obtained by optical triangulation. We present the theory behind the concept, simulation studies, the actual implementation and the conclusions drawn from the observational results obtained.
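The abstract does not say which triangulation formulation OpticalFence uses. As an illustration only, the sketch below assumes the common midpoint method: each station contributes a line of sight, and the object position is taken as the midpoint of the shortest segment joining the two lines, with the segment length ("miss distance") as a quality indicator. Velocity is then estimated by finite differencing positions from two synchronized frames. The function names, the shared Cartesian frame in km, and the two-station geometry are all hypothetical.

```python
import numpy as np


def triangulate_midpoint(p1, u1, p2, u2):
    """Midpoint of closest approach of two lines of sight, plus the miss distance.

    p1, p2 : station positions (3-vectors in a common Cartesian frame, km)
    u1, u2 : line-of-sight directions from each station toward the object
    """
    p1, u1, p2, u2 = (np.asarray(x, dtype=float) for x in (p1, u1, p2, u2))
    u1 = u1 / np.linalg.norm(u1)  # unit vectors, so u1.u1 = u2.u2 = 1
    u2 = u2 / np.linalg.norm(u2)
    w0 = p1 - p2
    b = u1 @ u2
    d = u1 @ w0
    e = u2 @ w0
    denom = 1.0 - b * b
    if denom < 1e-12:
        raise ValueError("lines of sight are (nearly) parallel")
    s = (b * e - d) / denom  # distance along ray 1 to its closest point
    t = (e - b * d) / denom  # distance along ray 2 to its closest point
    q1 = p1 + s * u1
    q2 = p2 + t * u2
    return (q1 + q2) / 2.0, float(np.linalg.norm(q1 - q2))


def velocity_from_frames(r0, r1, dt):
    """Finite-difference velocity from two positions triangulated dt seconds apart."""
    return (np.asarray(r1) - np.asarray(r0)) / dt


# Hypothetical geometry: stations 100 km apart, object at 500 km moving at 7.5 km/s.
st_a = np.array([0.0, 0.0, 0.0])
st_b = np.array([100.0, 0.0, 0.0])
dt = 0.1  # seconds between two synchronized frames (assumed)
true_r0 = np.array([50.0, 0.0, 500.0])
true_r1 = true_r0 + np.array([7.5, 0.0, 0.0]) * dt

r0, miss0 = triangulate_midpoint(st_a, true_r0 - st_a, st_b, true_r0 - st_b)
r1, miss1 = triangulate_midpoint(st_a, true_r1 - st_a, st_b, true_r1 - st_b)
v = velocity_from_frames(r0, r1, dt)
print(r0, v, miss0)
```

With noise-free lines of sight the sketch recovers the true position and a velocity of about 7.5 km/s along x, with a miss distance near zero; with real measurements the miss distance grows and can serve as one possible matching criterion.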

Hardware. The OpticalFence observing stations use mostly off-the-shelf components, are virtually maintenance-free and contain almost no moving parts. They are optimized for deployment in remote areas. The network concept is built around edge and cloud computing paradigms. Camera data streams are processed in real time by heavily optimized on-board software. A key feature of the system is time synchronization: all cameras in the network are triggered by an external GPS-synchronized device, guaranteeing that all image sequences are taken at the same moment, with microsecond precision.
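Why synchronization matters can be seen with simple arithmetic: if two cameras image the same object with a timing offset, the object has moved by roughly its speed times that offset, and triangulation silently absorbs this as a position error. The sketch below assumes a round LEO speed of 7.5 km/s (an illustrative value, not taken from the abstract) and ignores viewing geometry.

```python
# Along-track displacement of an object between two exposures offset by dt_s.
# Assumption: illustrative round LEO speed of about 7.5 km/s.
LEO_SPEED_M_S = 7_500.0


def timing_position_error_m(dt_s, speed_m_s=LEO_SPEED_M_S):
    """Distance (m) the object travels during a camera timing offset of dt_s seconds."""
    return speed_m_s * dt_s


for label, dt_s in [("1 us", 1e-6), ("1 ms", 1e-3), ("10 ms", 1e-2)]:
    print(f"{label:>6}: {timing_position_error_m(dt_s):8.4f} m")
```

At microsecond synchronization the displacement is under a centimetre, whereas a 1 ms offset already corresponds to about 7.5 m and 10 ms to about 75 m.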

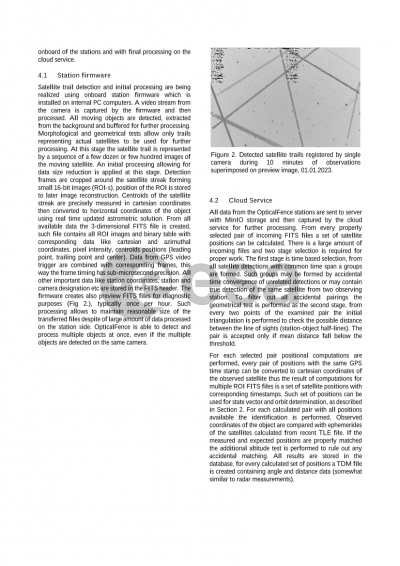

Software. Image-related data processing is done on board the devices. Once a moving object is detected, a small region of interest containing the object and its immediate surroundings is packed into a binary file and uploaded to the cloud for further data synthesis. There, all detections are cross-correlated, and events that satisfy certain matching criteria are merged using triangulation to provide final position and velocity measurements in space. Finally, detections are cross-referenced against a known catalogue and identified where possible. Ongoing work includes neural-network-based image processing for increased precision and robustness, as well as synthetic tracking based on TLE predictions to increase sensitivity.
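The abstract does not describe the binary format or the matching criteria, so the following is only a minimal sketch under stated assumptions. It packs a region-of-interest cut-out behind a hypothetical fixed header (station id, trigger timestamp in microseconds, ROI origin and size), unpacks it again, and forms candidate pairs from detections at different stations that share the same trigger timestamp, which is possible because all cameras are triggered by a common GPS-synchronized device. The names `RoiDetection`, `pack_roi`, `unpack_roi` and `candidate_pairs` are illustrative; a real pipeline would add geometric gating (for example, a line-of-sight miss-distance threshold) before merging.

```python
import struct
from collections import defaultdict
from dataclasses import dataclass

import numpy as np

# Hypothetical little-endian header: station id (uint16), trigger timestamp in
# microseconds (int64), ROI origin x0, y0 and size w, h in pixels (uint16 each).
HEADER = struct.Struct("<HqHHHH")


@dataclass
class RoiDetection:
    station_id: int
    timestamp_us: int
    x0: int
    y0: int
    pixels: np.ndarray  # 2-D uint16 cut-out around the detected object


def pack_roi(det: RoiDetection) -> bytes:
    """Serialize header + raw little-endian uint16 pixels into one blob."""
    h, w = det.pixels.shape
    header = HEADER.pack(det.station_id, det.timestamp_us, det.x0, det.y0, w, h)
    return header + det.pixels.astype("<u2").tobytes()


def unpack_roi(blob: bytes) -> RoiDetection:
    """Inverse of pack_roi."""
    station_id, ts, x0, y0, w, h = HEADER.unpack_from(blob, 0)
    pixels = np.frombuffer(blob, dtype="<u2", count=w * h, offset=HEADER.size)
    return RoiDetection(station_id, ts, x0, y0, pixels.reshape(h, w))


def candidate_pairs(detections):
    """Pair detections from different stations that share a trigger timestamp."""
    by_time = defaultdict(list)
    for det in detections:
        by_time[det.timestamp_us].append(det)
    pairs = []
    for ts in sorted(by_time):
        group = by_time[ts]
        for i in range(len(group)):
            for j in range(i + 1, len(group)):
                if group[i].station_id != group[j].station_id:
                    pairs.append((group[i], group[j]))
    return pairs


# Usage with made-up values.
roi = RoiDetection(3, 1_700_000_000_000_000, 812, 455,
                   np.arange(25, dtype=np.uint16).reshape(5, 5))
restored = unpack_roi(pack_roi(roi))
dets = [
    RoiDetection(1, 1_000, 10, 10, np.zeros((3, 3), np.uint16)),
    RoiDetection(2, 1_000, 40, 12, np.zeros((3, 3), np.uint16)),
    RoiDetection(1, 1_100, 11, 10, np.zeros((3, 3), np.uint16)),
]
pairs = candidate_pairs(dets)
```

Keying on the exact trigger timestamp keeps the cross-station search cheap; only detections from the same synchronized exposure are ever compared.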

Summary. The presented system is modular and easily upgradeable, in terms of both software and hardware, and provides a novel approach to space surveillance and tracking (SST) data delivery for LEO.
