
Abstract

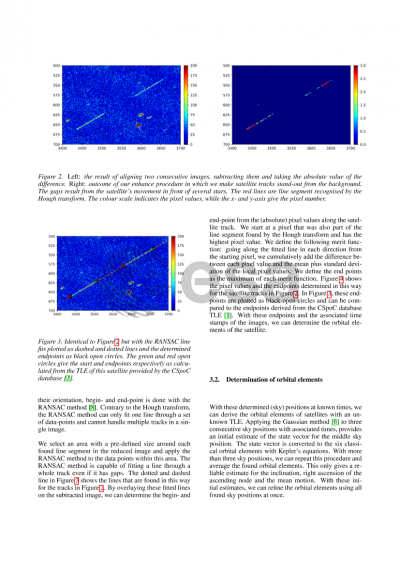

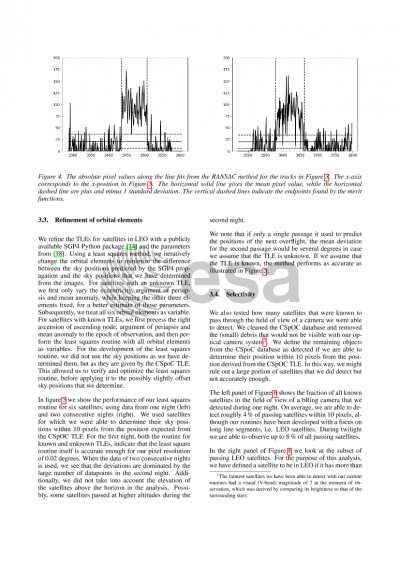

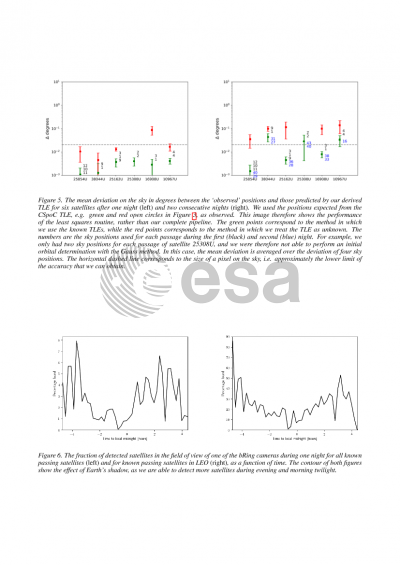

We have used an existing robotic, multi-lens, all-sky camera system, coupled to a dedicated data reduction pipeline, to automatically determine the orbital parameters of satellites, mainly those in Low Earth Orbit (LEO). Each of the five fixed cameras has a field of view of 53 × 74 degrees, and together they cover the entire sky down to 20 degrees above the horizon. Each camera takes an image every 6.4 seconds, after which the images are automatically processed and stored. The automated pipeline we developed recognizes satellite tracks to pixel-level accuracy (~0.02 degrees) and uses their endpoints to determine the orbital elements in the form of standardized Two-Line Elements (TLEs). The routines, which use existing algorithms such as the Hough transform and the RANSAC method, can be applied to any optical dataset.
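To make the track-recognition step more concrete, the following is a minimal sketch of how a RANSAC line fit can isolate a straight satellite track among candidate bright pixels and return its two endpoints. It is not the pipeline described here: the function name `ransac_line`, the parameters `n_iter` and `tol`, and the assumption that candidate pixel coordinates have already been extracted (for example by thresholding or a Hough transform) are all illustrative choices.

```python
import numpy as np

def ransac_line(points, n_iter=200, tol=1.0, seed=0):
    """Fit one line to 2D pixel coordinates with a basic RANSAC loop.

    Hypothetical helper for illustration only.
    Returns (inlier_mask, endpoints); endpoints is a (2, 2) array with the
    two extreme inlier positions projected onto the fitted line.
    """
    rng = np.random.default_rng(seed)
    pts = np.asarray(points, dtype=float)
    best_mask = np.zeros(len(pts), dtype=bool)

    for _ in range(n_iter):
        # Draw two distinct points and form the candidate line through them.
        i, j = rng.choice(len(pts), size=2, replace=False)
        d = pts[j] - pts[i]
        norm = np.hypot(d[0], d[1])
        if norm == 0:
            continue
        # Perpendicular distance of every point to the candidate line.
        rel = pts - pts[i]
        dist = np.abs(d[0] * rel[:, 1] - d[1] * rel[:, 0]) / norm
        mask = dist < tol
        if mask.sum() > best_mask.sum():
            best_mask = mask

    if best_mask.sum() < 2:
        raise ValueError("no line with at least two inliers was found")

    # Refine the direction as the principal axis of the inliers.
    inliers = pts[best_mask]
    centre = inliers.mean(axis=0)
    _, _, vt = np.linalg.svd(inliers - centre, full_matrices=False)
    direction = vt[0]
    # Project inliers onto the axis; the extremes are the track endpoints.
    t = (inliers - centre) @ direction
    endpoints = np.stack([centre + t.min() * direction,
                          centre + t.max() * direction])
    return best_mask, endpoints

if __name__ == "__main__":
    # Synthetic example: a straight track plus a few unrelated bright pixels.
    x = np.linspace(10.0, 110.0, 50)
    track = np.column_stack([x, 20.0 + 0.5 * (x - 10.0)])
    clutter = np.array([[5.0, 90.0], [50.0, 5.0], [100.0, 10.0]])
    mask, ends = ransac_line(np.vstack([track, clutter]))
    print(mask.sum(), ends)
```

In a real reduction, the endpoint pixel positions would still have to be converted to sky coordinates through an astrometric solution before they could feed an orbit determination. That step is outside the scope of this sketch.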

For a satellite with an unknown TLE, we need at least two overflights to accurately predict the next one. For satellites with known TLEs, the elements are refined with every pass, which can, for example, improve collision detection or orbital decay predictions. Our current data analysis focuses on payloads in LEO, for which we recover between 50% and 80% of the overpasses during twilight. We have detected LEO satellites down to 7th magnitude. Higher objects, up to geosynchronous orbit, were observed but are not yet picked up automatically by our reduction pipeline. In a follow-up project we will extend these capabilities to higher orbits and fainter objects by improving our data reduction and our instrumental set-up.
